Why use this feature

- Secure: Prevents unauthorized data access.

- Compliant: Protects sensitive information.

- Efficient: Adds fine-grained access control to RAG.

How it works

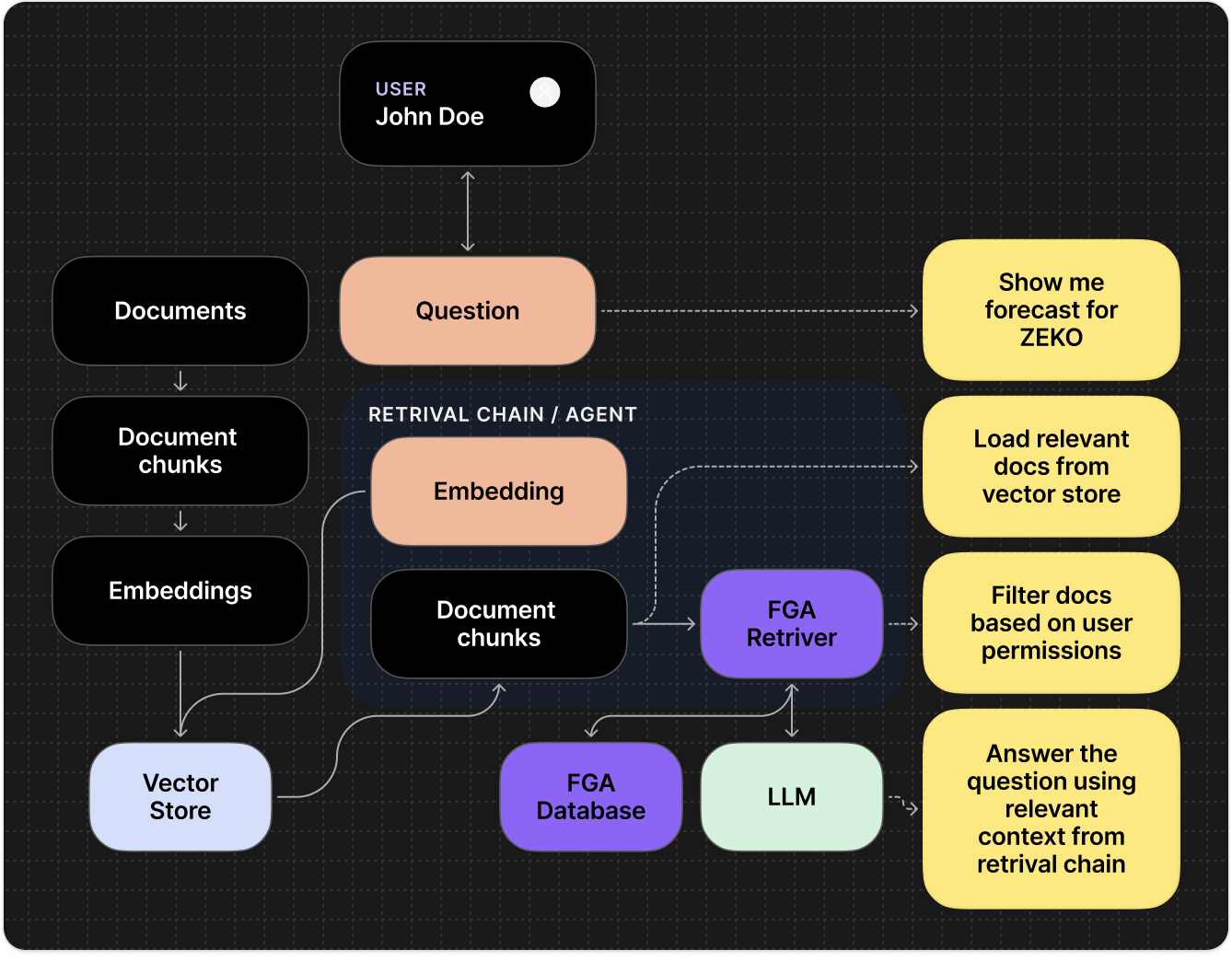

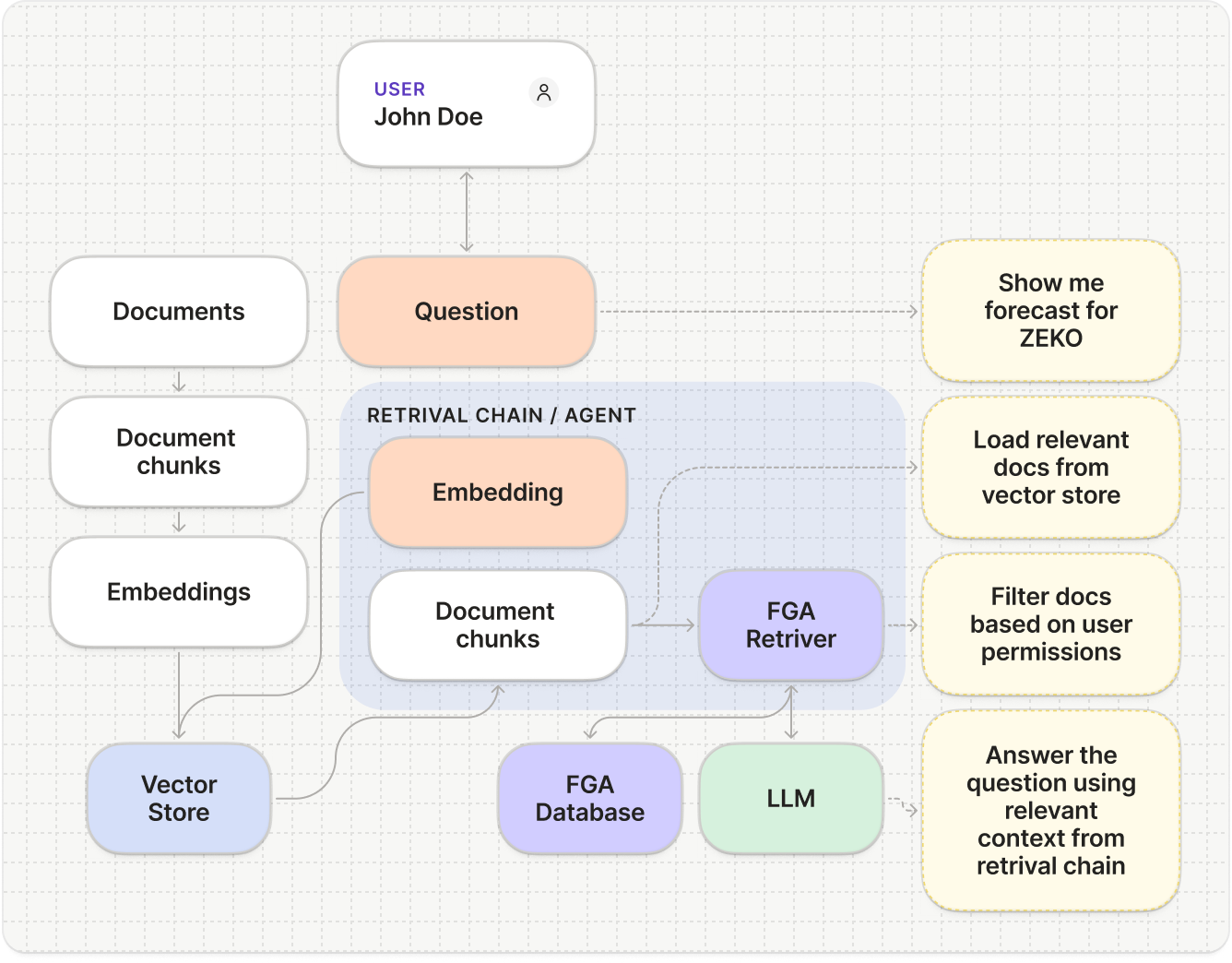

Generative AI apps and agents often need to ground responses in retrieved documents, for example to:

- Produce a forecast based on sales data.

- Summarize internal reports or policies.

- Pull insights from product or customer docs.

The challenge: Securing data in RAG pipelines

Retrieval-Augmented Generation (RAG) is a powerful technique that enhances Large Language Models (LLMs) by providing them with relevant, up-to-date information from external data sources, such as a company’s internal knowledge base or document repository. However, without proper access controls, a RAG pipeline could retrieve documents containing sensitive information (e.g., financial reports, HR documents, strategic plans) and use them to generate a response for a user who should not have access to that data. This could lead to serious data breaches and compliance violations. Simply filtering based on user roles is often insufficient for managing the complex, relationship-based permissions found in real-world applications.

The solution: Auth0 Fine-Grained Authorization (FGA)

To solve this challenge, Auth0 for AI Agents uses Auth0 Fine-Grained Authorization (FGA). Auth0 FGA is a flexible, high-performance authorization service for applications that require a sophisticated permissions system. It implements Relationship-Based Access Control (ReBAC) to manage permissions at large scale. Auth0 FGA is built on top of OpenFGA, a CNCF sandbox project created by Auth0. Auth0 FGA allows you to decouple your authorization logic from your application code. Instead of embedding complex permission rules directly into your application, you define an authorization model and store relationship data in Auth0 FGA. Your application can then query Auth0 FGA at runtime to make real-time access decisions.

Fine-Grained Access in RAG

Integrating Auth0 FGA into your RAG pipeline ensures that every document is checked against the user’s permissions before it’s passed to the LLM.

1. Authorization model

First, you define your authorization model in Auth0 FGA. This model specifies the types of objects (e.g., document), the possible relationships between users and objects (e.g., owner, editor, viewer), and the rules that govern access.

2. Store relationships

You store permissions as ‘tuples’ in Auth0 FGA. A tuple is the core data element, representing a specific relationship in the format of (user, relation, object). For example, user:anne is a viewer of document:2024-financials.

3. Fetch and filter

When a user submits a query to your AI agent, your backend first fetches relevant documents from a vector database and then makes a permission check call to Auth0 FGA. This call asks, “Is this user allowed to view these documents?” Our AI framework SDKs abstract this step and make it as easy as plugging a filter into your retriever tool.

4. Secure retrieval

Auth0 FGA determines if the user is authorized to access the documents. Your application backend uses this data to filter the results from the vector database and only sends the authorized documents to the LLM.
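The four steps above can be sketched in plain Python. This is a minimal in-memory illustration under stated assumptions, not the Auth0 FGA SDK: `MODEL`, `TUPLES`, `check`, `filter_authorized`, the `user:bob` tuple, and the `hr-handbook` document are hypothetical names introduced here. The rule that an owner is also an editor and an editor is also a viewer is likewise an assumed example model. A real application would send the permission check to Auth0 FGA instead of evaluating it locally.

```python
from __future__ import annotations

from dataclasses import dataclass

# Step 1: a toy authorization model. For each relation on a `document`, list
# the other relations that imply it (owner implies editor implies viewer).
# Hypothetical stand-in for a model defined in Auth0 FGA.
MODEL = {
    "document": {
        "owner": [],
        "editor": ["owner"],
        "viewer": ["editor"],
    }
}

# Step 2: relationship tuples in (user, relation, object) form.
TUPLES = {
    ("user:anne", "viewer", "document:2024-financials"),
    ("user:bob", "owner", "document:hr-handbook"),
}


def check(user: str, relation: str, obj: str) -> bool:
    """Return True if a tuple grants the relation directly or via the model."""
    if (user, relation, obj) in TUPLES:
        return True
    object_type = obj.split(":", 1)[0]
    implied_by = MODEL.get(object_type, {}).get(relation, [])
    return any(check(user, stronger, obj) for stronger in implied_by)


@dataclass
class Document:
    id: str
    text: str


def filter_authorized(user: str, docs: list[Document]) -> list[Document]:
    """Steps 3 and 4: keep only the retrieved documents the user may view."""
    return [d for d in docs if check(user, "viewer", f"document:{d.id}")]


# Stand-in for the results of a vector database similarity search.
retrieved = [
    Document("2024-financials", "Annual revenue summary."),
    Document("hr-handbook", "Vacation policy overview."),
]

# Only documents that pass the check are forwarded to the LLM as context.
context_for_llm = filter_authorized("user:anne", retrieved)
```

With these tuples, `user:anne` receives only `2024-financials`, while `user:bob` receives only `hr-handbook` because ownership implies viewer access in the assumed model. Filtering after retrieval keeps the vector search unchanged and keeps the authorization decision in one place.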